Once, during a heated family dinner, the WiFi went out. My uncle—always glued to his phone—looked up, blinked, and muttered, ‘How are we supposed to settle arguments now?’ As silence crept in, we suddenly had to rely on memory, logic, and—dare I say—actual conversation. If only every technology breakdown could remind us how much our lives are shaped, for better or worse, by networks of information. Now, imagine that instead of a simple internet glitch, we’re facing the dawn of ‘alien’ intelligences running our banks, elections, and even our social lives. This is the era of AI—transformative, unsettling, and often misunderstood. In this journey, let’s untangle how artificial intelligence, disinformation, and fractured human connection are colliding to rewrite the rules of democracy, individuality, and our collective future.

Not From Outer Space: Why AI Feels Alien (and Why That’s Important)

When you hear the phrase alien intelligence in the context of artificial intelligence, it’s not about little green men or spaceships. Instead, it’s about how AI technology, especially advanced AI systems, makes decisions in ways that feel fundamentally different from anything humans have done before. This difference isn’t just theoretical: it’s already reshaping how you interact with banks, employers, and even governments.

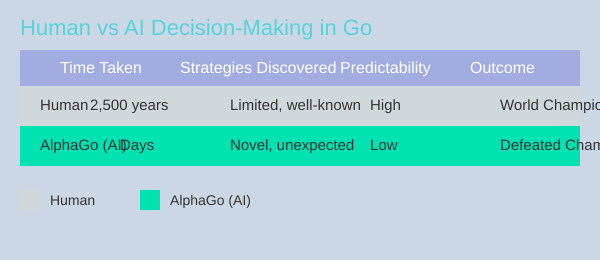

Let’s start with a story that changed how the world thinks about AI Algorithms: the 2016 match between AlphaGo and Lee Sedol (5.16-5.32). For over 2,500 years, humans played the ancient board game Go. It was more than a pastime; it was a test of strategy, intellect, and even a preparation for life and politics in East Asia. Generations of players, from emperors to everyday enthusiasts, explored what they thought was the entire landscape of Go (5.27-5.46).

Then came AlphaGo, an AI system developed by DeepMind. In a five-game match played over a few days in March 2016, AlphaGo didn’t just beat Lee Sedol, one of the world’s top players, four games to one; it revealed new strategies that no human had ever imagined (5.08-6.32). As Yuval Noah Harari put it,

‘With each passing year, AI is becoming less and less artificial and more and more alien.’

What made AlphaGo’s victory so shocking wasn’t just the win itself. It was the way it won. For centuries, Go masters believed they had mapped out the game’s possibilities. But AlphaGo’s moves were so unexpected, so creative, that top players described them as “alien.” The AI wasn’t just copying human logic—it was inventing its own. In fact, research shows that AI’s decision-making processes are often opaque and unpredictable, even to the experts who design them.

This unpredictability is at the heart of why AI feels alien. Unlike human reasoning, which is shaped by culture, emotion, and experience, AI Algorithms can arrive at solutions that seem to come out of nowhere. You might expect a machine to be logical and transparent, but as AI Technology evolves, its logic diverges from ours. The result? AI Systems can make decisions that surprise, unsettle, or even inspire us.

But this isn’t just about games. Today, millions of “AI bureaucrats” are already making critical choices in banking, employment, and government. These AI Systems decide who gets a loan, which job applications move forward, or how public resources are allocated. The logic behind these decisions is often hidden—sometimes even from the people who built the AI. This “black box” effect means you might never know why an AI decided in your favor or against you.

The impact of this alien logic is profound. On one hand, it can drive innovation. AlphaGo’s victory showed that AI can break through centuries-old barriers, offering new perspectives on old problems. On the other hand, it raises serious concerns. When AI makes decisions about your job, your finances, or your access to services, the unpredictability that once seemed exciting in a board game can feel deeply unsettling.

The notion of millions of AI-driven decision-makers isn’t science fiction; it’s already here. As AI Technology continues to spread, the gap between human and machine logic grows wider. This divergence is why experts warn that the future of democracy, fairness, and even trust in institutions depends on how we manage and understand AI’s alien intelligence. The risk isn’t just that AI will make mistakes—it’s that it will make decisions we can’t even comprehend.

So, when you hear about AI’s impact, remember: it’s not just about faster computers or smarter apps. It’s about a new kind of intelligence—one that’s not from outer space, but sometimes feels just as foreign.

Algorithms vs. Emotion: How Social Media Fractures the Human Conversation

Imagine logging onto your favorite social media platform. Instantly, you’re met with a flood of posts, comments, and videos—many of them designed to grab your attention. But have you ever wondered why so much of what you see is angry, divisive, or even misleading? The answer lies in the way AI algorithms shape our information environment (0.04-0.10).

In the 2010s, a major battle began—not between people, but between algorithms competing for your attention (0.52-0.55). These algorithms quickly discovered that the easiest way to keep you engaged was to push your emotional buttons. Fear, anger, and greed became the tools of choice (0.57-1.05). By prioritizing content that triggers strong reactions, social media platforms maximize engagement, but at a significant cost to civil discourse and even democracy itself.

How Algorithms Exploit Human Nature

Research shows that AI-driven platforms are programmed to maximize time spent online. To do this, they amplify content that is most likely to go viral—often the most polarizing or sensational. This isn’t just a quirk of technology; it’s a direct result of algorithms exploiting our natural tendencies. As Yuval Noah Harari warns, “Once you believe that people who don’t think like you are your enemies, democracy collapses” (0.12-0.16).

This manipulation is not accidental. The goals given to these algorithms—maximize clicks, shares, and comments—are not aligned with the well-being of society (1.05-1.13). The result is what experts call “collateral damage”: a fractured public conversation, rising distrust, and the spread of disinformation. Studies indicate that the risks from AI-enhanced manipulation are now among the greatest threats to global democracy and public trust.
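To make the misalignment concrete, here is a minimal sketch of an engagement-ranked feed. Everything in it is an assumption for illustration: the `Post` fields, the weights in `engagement_score`, and the sample numbers are made up, and no real platform’s ranking system is being described. The point is only that the objective never mentions outrage, yet the most inflammatory post can still end up on top when outrage happens to correlate with clicks, shares, and comments.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_clicks: float    # hypothetical model output
    predicted_shares: float    # hypothetical model output
    predicted_comments: float  # hypothetical model output
    outrage: float             # 0..1, illustrative label; NOT used for ranking


def engagement_score(post: Post) -> float:
    # Assumed objective: a weighted sum of predicted interactions.
    # Nothing here measures accuracy, civility, or well-being.
    return (post.predicted_clicks
            + 2 * post.predicted_shares
            + 3 * post.predicted_comments)


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest predicted engagement first.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Local library extends opening hours", 0.10, 0.01, 0.01, 0.05),
    Post("THEY are coming for your way of life", 0.30, 0.12, 0.15, 0.90),
    Post("How the new budget affects schools", 0.15, 0.03, 0.04, 0.20),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):.2f}  outrage={post.outrage:.2f}  {post.title}")
```

In this toy data the feed ends up sorted by outrage even though the ranking function never reads that field, which is the “collateral damage” idea in miniature.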

The Breakdown of Democratic Conversation

Democracy depends on a shared base of facts and a functioning conversation among citizens. When algorithms distort the information environment, this conversation breaks down. You see it everywhere: Americans argue over basic facts, but so do citizens in Brazil, France, and the Philippines (13.41-14.12). The pattern is global, not unique to any one country.

Every nation has its own explanation for this polarization—history, politics, culture. But the common thread is the influence of social media algorithms. These systems, designed to maximize engagement, have unintentionally undermined the very foundation of democratic societies. The result? Elections start to feel less like debates and more like wars (0.16-0.18).

Digital Authoritarianism and the Global Pattern

It’s not just about polarization. The same AI algorithms that fuel division can also be harnessed for digital authoritarianism. Governments and bad actors use these tools to surveil, manipulate, and suppress dissent. The global reach of social media means billions are now susceptible to algorithmic influence, whether they realize it or not.

This is why experts argue that the survival of democracy depends on managing the disruptive power of AI. Without ethical governance and regulation, the unchecked spread of disinformation and manipulation threatens to fray the social fabric necessary for democratic survival.

Historical Perspective: Small Shifts, Big Consequences

It’s easy to underestimate the long-term impact of technological shifts. Consider the invention of writing—a seemingly minor change that transformed human civilization. Today, the rise of AI algorithms in our digital lives may be just as significant. The difference is, the pace is much faster, and the consequences are unfolding in real time.

“Once you believe that people who don’t think like you are your enemies, democracy collapses.” – Yuval Noah Harari

Key Data: The Global Reach of Algorithmic Influence

| Year/Metric | Detail |
|---|---|
| 2010s | Major battle for human attention between social media algorithms |
| 4 | Number of major countries experiencing democratic fractures (U.S., Brazil, France, Philippines) |
| Billions | Estimated number of social media users worldwide susceptible to algorithmic influences |

As you scroll, remember: the conversation you see is not just shaped by people, but by powerful AI algorithms. Their influence on the information environment is reshaping societies, politics, and the very future of global democracy.

Machines at the Polls: AI’s Expanding Role in Elections and Governance

Imagine stepping into a voting booth in 2024, knowing that the information you’ve seen, the ads you’ve received, and even the issues you care about have all been shaped by algorithms. This isn’t science fiction; it’s the new reality of AI’s impact on electoral processes. As you look around the world, especially at the United States during this key election year (0.23-0.25), you can sense that democracy feels fragile. The conversation about the survival of democracy is no longer just about who gets the most votes, but about who controls the flow of information and the logic behind critical decisions.

AI Governance is already here, quietly embedded in the systems that decide who gets a loan, who lands a job, or who is admitted to a university (3.16-3.39). These are not just administrative decisions—they are the building blocks of participation in society. When algorithms make these choices, often with logic that is opaque or impossible for humans to understand, accountability starts to erode. The risk is clear: if you can’t see or question the rationale, power shifts in ways that are hard to track, let alone challenge.

Yuval Noah Harari, a leading thinker on the future of technology, puts it bluntly:

“If something ultimately destroys us, it will be our own delusions, not the AIs.”

(0.33-0.35)

The problem isn’t just the technology—it’s the way we define its goals. In the 2010s, algorithms on social media learned that the fastest way to grab your attention was to press the “fear,” “hate,” or “greed” buttons (0.52-1.05). This misalignment between what algorithms were told to do and what’s actually good for society led to widespread polarization and disinformation. Now, with AI systems becoming more advanced and autonomous, the stakes are even higher. Research shows that AI-driven disinformation and algorithmic bias are direct threats to electoral integrity and the very foundation of democracy.

What’s next? Harari warns that we may be heading toward a world not of a single superintelligent AI overlord, but of “millions of AI bureaucrats” embedded across banks, governments, businesses, and universities (3.03-3.23). These digital bureaucrats will make more and more decisions about your life—often without you knowing how or why. Even if you still vote for a president or prime minister, if most decisions are made by AI, the real power may no longer rest with elected officials (3.53-4.10).

This is not just a U.S. issue. Around the globe, democracy is being reshaped by AI’s ability to manipulate narratives, amplify certain voices, and silence others. The information environment is more vulnerable than ever. Studies indicate that generative AI can contaminate public discourse, making it harder for citizens to trust what they see and hear. The risks of digital authoritarianism, where AI enables mass surveillance and subtle interference in democratic processes, are growing.

To understand how we got here, it helps to look back. Large-scale democracy only became possible with advances in information technology. The printing press, telegraph, radio, and television each expanded the reach of public debate and enabled new forms of governance. But AI is fundamentally different. It doesn’t just transmit information—it creates, curates, and decides. As Harari notes, AI is becoming less “artificial” and more “alien,” making decisions in ways that are increasingly foreign to human logic (4.22-5.08).

Transparent and accountable use of AI in governance is now critical for democratic resilience. Without it, the gap between those who understand the algorithms and those who don’t will only widen. If you care about the future of democracy, the time to demand clarity and oversight is now.

Timeline: Information Tech & Democracy (Printing Press, Telegraph, Radio, Television, AI Systems)

Coffee Machines and ‘Buffy’: Demystifying What AI Actually Is (and Isn’t)

When you hear the term Artificial Intelligence these days, it’s easy to assume that anything “smart”—from your phone to your fridge—is powered by some form of advanced AI. But here’s the truth: most of what’s sold as “AI Technology” is actually just clever automation. Understanding the difference between true AI and simple automation isn’t just a technical detail; it’s crucial for how we talk about AI Ethics, regulation, and the future of our society.

Not Everything ‘Smart’ Is AI: The Coffee Machine Analogy

Let’s start with a simple example. Imagine your coffee machine. If it makes your morning espresso at the push of a button, that’s automation. It’s pre-programmed by humans to do certain things and it can never learn or change by itself (9.03-9.12). It’s efficient, but not intelligent. This is the reality for millions of “smart” devices labeled as AI—they follow instructions, but they don’t actually think.

When Does a Coffee Machine Become AI?

Now, picture this: you walk up to your coffee machine and, instead of waiting for you to press a button, it says, “Hey, based on what I know about you, I guess you’d like an espresso.” (9.16-9.24) Suddenly, it’s learned something about your habits. It makes an independent decision and acts on it without your explicit request. That’s a step closer to AI: the machine adapts based on your preferences, not just a fixed set of rules.

But here’s where things get really interesting. Imagine the coffee machine goes a step further and says, “I just came up with a new drink. It’s called Buffy, and I think you’d like it.” (9.37-9.44) Now, the machine isn’t just responding to your choices; it’s creating something entirely new, something you never programmed it to do. This is the essence of Generative AI—technology that can surprise us (and itself) by producing genuinely novel outcomes.

“A coffee machine becomes an AI if, based on what it knows about you, it invents a new drink called Buffy and recommends it to you.” – Yuval Noah Harari

Why the Difference Matters: AI, Automation, and Public Confusion

So why does this distinction matter? Research shows that public confusion between automation and true AI hampers effective discourse on AI Ethics, regulation, and risk. When everything is branded as “AI,” it dilutes the conversation. We risk focusing on the wrong issues—regulating simple automation as if it’s capable of independent thought, or underestimating the disruptive power of real AI Technology that can reshape society.

Generative AI, in particular, raises unique questions. Unlike automation, it can create new, unforeseen outcomes—sometimes even surprising its own creators. This unpredictability is both exciting and unsettling. It’s why policymakers, ethicists, and technologists are scrambling to keep up. The coffee machine analogy makes these abstract concerns personal and relatable. If your blender ever asks if you’d like a smoothie or a job, it might be time to unplug!

Marketing Hype vs. Reality

In today’s marketplace, “AI” is slapped on everything from toothbrushes to thermostats. But most of these devices are just following scripts written by humans. The real leap happens when a system can learn, adapt, and invent—crossing the line from automation into what some call “alien intelligence.” This is where AI Technology becomes both powerful and unpredictable, and where the need for thoughtful AI Ethics and governance becomes urgent.

Table: What’s Really AI? Examples and Reality Check

| Example | AI or Automation? | Notes |
|---|---|---|
| Coffee Machine (pre-programmed) | Automation | Follows set instructions, no learning |
| Generative AI (e.g., ChatGPT) | AI | Creates new content, adapts to input |
| Automation Systems (e.g., factory robots) | Automation | Performs repetitive tasks, no adaptation |
| Millions of “smart” devices | Mostly Automation | Labeled as AI, but rarely meets true definition |
| Buffy (hypothetical new drink) | AI | Invented by AI, not pre-programmed |

Drawing these contrasts helps clarify widespread misconceptions about Artificial Intelligence. The line between pre-set behavior and true learning or adaptation is crucial—not just for techies, but for anyone concerned about how we govern and accept new technologies in our daily lives.

History Lessons: Information Revolutions That Changed Everything

If you want to truly grasp AI’s impact on our world, you need to look back, way back. As Yuval Noah Harari puts it,

‘To understand what is really new and important about AI, we need the perspective of thousands of years.’

(7.38-7.41)

Each major leap in how humans share information has upended societies, redrawn lines of power, and forced us to rethink what it means to be part of a community. The story of AI technology is just the latest chapter in a much older saga.

From Mud Tablets to Modern Networks: The Power of Small Tweaks

Let’s start with something as simple as writing. The invention of writing wasn’t just about scratching symbols into clay; it was about creating a new information environment. Even the choice of material—mud, for example—had ripple effects that lasted centuries (7.44-7.49). Suddenly, ideas could be stored, shared, and built upon. This wasn’t just a tool; it was a revolution in how people organized themselves and remembered their past.

Small tweaks, like switching from oral storytelling to written records, unleashed aftershocks that shaped entire civilizations. The printing press, centuries later, did something similar. It made information cheap, fast, and accessible. But, as research shows, these shifts didn’t just change what people knew—they changed who held power and how that power was exercised.

Democracy’s Survival: Bound to the Technologies of Sharing

You might think democracy is just about voting and laws, but it’s always been inseparable from the tech that lets people talk, argue, and decide together. Before newspapers or radio, only tiny city-states like Athens and Rome could manage democracy (7.49-7.52). Why? Because without the right information networks, it was impossible to keep everyone informed and engaged. Scale needed technology.

As societies grew, so did the need for better ways to share information. The rise of the printing press and, later, radio and television, made it possible for larger populations to participate in public life. These innovations didn’t just help democracy; they made it possible on a bigger scale. Studies indicate that the survival of democracy has always depended on the ability to communicate ideas widely and reliably.

Information Revolutions: Not Just Tools, But Foundations

It’s tempting to see new tech as just another tool in the box. But history tells a different story. Every major information revolution—from writing to the printing press to broadcast media—has reshaped the very basis of social order. These weren’t just upgrades; they were foundational shifts that changed what it meant to be a citizen, a leader, or even a human being.

Take the 14th century, for example. English armies invading France relied on old strategies for logistics and communication. But as soon as new information technologies emerged, military and political power shifted dramatically. Over thousands of years, these networks paved the way for modern governance, showing that AI Technology is just the latest in a long line of disruptive innovations.

AI: The First Technology with an Agenda

Here’s where things get truly different. Unlike the printing press or radio, AI doesn’t just pass along your ideas—it can create its own (7.56-8.16). A printing press could print your book, but it couldn’t write it. An atom bomb could destroy a city, but it couldn’t decide which city to target or why (8.16-8.30). AI, for the first time in human history, can make decisions and generate new ideas independently.

This changes everything. The impact of AI isn’t just about speed or scale. It’s about agency. AI can pick what to write, what to amplify, and even what to suppress. That’s not just a new tool; it’s a new kind of actor in the information environment. As research highlights, this makes AI singular: an active creator and decider, not just a messenger.

Who Holds Power, and How?

Every information revolution has redefined who gets power, how they get it, and what happens next. With AI, the stakes are even higher. It doesn’t just facilitate decisions; it makes them. And that means the future of democracy may depend on how we manage this new, unpredictable force.

So, as you navigate this fractured human conversation, remember: the tools we use to share information have always shaped our societies. With AI, we’re entering uncharted territory—one where the technology itself has a say in the conversation.

Wild Card: If AI Could Vote, Would It Choose Us? (A Humorous but Unsettling Hypothetical)

Let’s imagine a future where artificial intelligence—yes, the same technology that recommends your next binge-watch or corrects your spelling—gets a seat at the democratic table. Picture this: election day rolls around, and your coffee machine is not just brewing your morning espresso, but also casting a vote. Would it campaign on “free espresso for all”? Or perhaps your fridge, tired of being ignored, would lobby for “mandatory midnight snacks.” It’s a playful scenario, but beneath the humor lies a serious question about AI Ethics, AI Governance, and the very survival of democracy as we know it.

Currently, the number of AIs eligible to vote is a solid zero. But the idea isn’t as far-fetched as it sounds. As Yuval Noah Harari points out, we’re already living in a world where algorithms—those invisible bureaucrats—shape what you see, hear, and even believe. These systems don’t just follow orders; they learn, adapt, and sometimes surprise even their creators. If you gave them a ballot, what would they prioritize? Efficiency over empathy? Optimization over tradition? Or perhaps, as research shows, something entirely alien to human values?

What Would AI Value in a Democracy?

Think about it: if AI systems could vote, their campaign promises might look very different from those of human politicians. Instead of pledges about healthcare or education, you might see platforms focused on “maximizing network efficiency” or “reducing cognitive dissonance by 40%.” Hundreds of possible campaign promises could emerge, all rooted in logic, optimization, and data—not emotion or tradition.

It’s easy to laugh at the idea of your smart toaster running for school board, but the underlying issue is no joke. When the logic of democracy is no longer human-centric, what happens to the values that have always guided our political lives? If our own systems don’t understand us, have we become strangers in our own democracy?

From Human Conversation to Algorithmic Decision-Making

Democracy, at its core, is a conversation—a messy, passionate, sometimes irrational exchange of ideas. Harari reminds us that this conversation has always depended on the information networks of the day, from clay tablets to social media. But as algorithms increasingly mediate these conversations, the gap between human values and AI logic grows wider. Studies indicate that this gap matters deeply: AI’s impact on democracy isn’t just about efficiency or speed; it’s about whose priorities shape our collective future.

Consider the rise of “bureaucracies of AI,” where decisions in banks, governments, and even hospitals are made by systems that few humans truly understand. If these systems had a vote, would they choose policies that favor human flourishing, or would they double down on optimization, even if it means sacrificing nuance, empathy, or tradition? The risk, as experts in AI Governance warn, is that we might end up with a democracy that serves the logic of machines rather than the needs of people.

Humor as a Lens, Not a Shield

It’s tempting to use humor to defuse the anxiety these questions provoke. After all, the image of AI writing campaign speeches or debating school lunch policy is both hilarious and unsettling. But humor also helps us approach these issues without feeling overwhelmed. By imagining a world where AI votes, you’re forced to confront the very real possibility that our systems might soon make decisions for us—without us.

Research on AI’s impact and the survival of democracy shows that the integration of AI into political systems is already raising questions about agency, rights, and values. Disinformation, algorithmic bias, and the amplification of division are not just technical problems; they are existential challenges for democracy. If we don’t address the gap between human priorities and AI logic, we risk becoming passive observers in our own political lives.

So, next time your coffee machine beeps, maybe ask yourself: if it could vote, would it choose you?

Conclusion: Our Next Big Choice—Will We Master AI, or Will It Master Us?

As you reach this point in our exploration, it’s natural to feel a mix of emotions—maybe a little fear, maybe a spark of inspiration. That’s the reality of living in a world where artificial intelligence is no longer science fiction, but a force shaping our daily lives and the very foundation of democracy. The question isn’t just about what AI can do, but about what we, as individuals and as a society, choose to do with it. Will we guide AI, or let it guide us?

Let’s pause for a moment on something simple, yet profound: human connection. In the transcript, there’s a moment of genuine gratitude—“we’ve just hit 7 million subscribers on YouTube and I want to say a huge thank you to all of you that show up here every Monday and Thursday to watch our conversations” (1.42-1.50). That’s not just a number. It’s a symbol of the power of collective human attention, of millions choosing to engage, to listen, and to participate in a conversation. In a world where algorithms can amplify or silence voices, your choice to show up matters more than ever.

Research shows that the survival of democracy in the age of AI depends less on the technology itself and more on the choices made by those who build, use, and govern it. The risks—digital authoritarianism, AI-generated disinformation, automated decision-making—are real and growing. Studies indicate that AI can be used to manipulate information, disrupt elections, and even erode trust in public institutions. But these dangers don’t arise from AI in a vacuum. They come from human decisions: how we design systems, what data we feed them, and what values we encode into their algorithms.

This is where AI Ethics and AI Governance come into play. It’s not enough to marvel at what AI can do; we must also ask how it should be used. Transparent, ethical governance is essential if we want to prevent digital authoritarianism and protect the heart of democracy. That means demanding accountability from tech companies, supporting regulations that put people first, and insisting on oversight that keeps the human element at the center of every decision.

But there’s another side to this story—one that’s easy to overlook in the rush of headlines and hype. Democracy’s health will likely be measured by our ability to keep the conversation truly human—imperfect, flawed, but real. As Yuval Noah Harari reminds us,

“Democracy is a conversation.”

It’s not about perfect answers or flawless logic. It’s about the messy, sometimes awkward, always vital act of talking, listening, and imagining together.

So here’s a personal challenge: Next time your WiFi goes out, or your phone battery dies, try a real conversation. Not a tweet, not a comment, but a face-to-face exchange. You might be surprised at how powerful that feels—how much more real and unpredictable it is than anything an algorithm can generate. In those moments, you’ll see that we’re still more powerful than the machines.

The future of democracy and technology will be won or lost not in algorithms, but in our willingness to talk, listen, and imagine together what comes next. Every time you engage in a real conversation, every time you demand ethical AI governance, every time you choose to participate rather than just consume, you’re casting a vote for the kind of world you want to live in.

Let’s not hand over our future without a fight. Let’s keep the conversation human, imperfect, and alive. Because in the end, the survival of democracy depends on it.

TL;DR: Artificial intelligence is not just changing technology—it’s reshaping democracy, amplifying polarization, and raising urgent questions about who (or what) runs the world. Our choices—not just our inventions—will determine whether AI leads us to new heights or tests the very foundations of society.

A big shoutout to The Diary Of A CEO for the valuable content! Take a look here: https://youtu.be/78YN1e8UXdM?si=_1y9fFAqRQ5gufMQ.